With Windows 10 support officially ending in October 2025, many users are upgrading to Windows 11 to stay protected with security updates. Some may be surprised, however, to find a new AI companion waiting for them: Copilot.

Positioned as Microsoft's most ambitious effort in generative AI, Copilot is now deeply integrated into Windows 11, Edge, Bing, and the Microsoft 365 suite. It is the centerpiece of Microsoft's multibillion-dollar partnership with OpenAI (the maker of ChatGPT and ChatGPT Atlas), whose GPT models power Copilot's intelligence. Combined with the launch of Copilot+ PCs, which come with a dedicated Copilot key, all of this shows how serious Microsoft is about steering Windows toward an AI-driven future.

However, not everyone welcomes this ever-present helper, which is reminiscent of Clippy but far more persistent. Copilot's tight integration raises privacy and security concerns, much like Microsoft-owned LinkedIn's move to train AI on public profiles and activity data, or Google's plan to embed its Gemini AI assistant deeply into Android to replace Google Assistant.

Only Microsoft 365 business users and IT administrators can completely remove Copilot from Windows 11. Everyone else, including users with personal or family subscriptions or with no Microsoft 365 subscription at all, can only limit its features and reduce its visibility.

- How to turn off Copilot AI

- How to remove Microsoft 365 Copilot (business subscription)

- How to turn off Microsoft 365 Copilot (personal or family subscription)

- How to hide Copilot icons in Windows 11

- How to turn off data and personalization features in the Copilot web app

- How to turn off Gaming Copilot model training

- How to delete your Copilot account

- What is Microsoft Copilot?

- What are the privacy risks of Microsoft Copilot?

- What are the security risks of Microsoft Copilot?

- A private alternative to Copilot and Microsoft

How to turn off Copilot AI

Depending on how you use Copilot, here's how you can remove it from your computer, adjust its settings, or reduce its presence:

How to remove Microsoft 365 Copilot (business subscription)

If you're a business user, go to Settings → Apps → Installed apps, select Copilot, and click Uninstall.

IT administrators can remove the Copilot app using the following PowerShell command:

$packageFullName = Get-AppxPackage -Name "Microsoft.Copilot" | Select-Object -ExpandProperty PackageFullName

Remove-AppxPackage -Package $packageFullName
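The command above returns an error if the package is not installed, because `$packageFullName` is then empty. As a minimal sketch (an assumption about how an administrator might want to test the removal first, not a Microsoft-documented procedure), the variant below checks whether the package exists for the current user and uses PowerShell's standard `-WhatIf` switch to preview the removal without changing anything:

```powershell
# Look up the Copilot package for the current user.
# The result is empty if the package is not installed.
$packages = Get-AppxPackage -Name "Microsoft.Copilot"

if (-not $packages) {
    Write-Output "Microsoft.Copilot is not installed for this user."
} else {
    foreach ($package in $packages) {
        # -WhatIf only previews the removal; delete it to actually uninstall.
        Remove-AppxPackage -Package $package.PackageFullName -WhatIf
    }
}
```

Once the preview output looks right, running the same script without `-WhatIf` performs the removal.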

How to turn off Microsoft 365 Copilot (personal or family subscription)

- Open any Microsoft 365 app (Word, Excel, PowerPoint, or OneNote).

- Go to File → Options → Copilot.

- Clear the Enable Copilot checkbox.

Repeat these steps for each Microsoft app in which you want to turn off Copilot.

If the Enable Copilot setting doesn't exist in any of the Microsoft apps:

- Open Word, Excel, PowerPoint, or OneNote.

- Go to File → Account → Account Privacy → Manage Settings → Connected experiences.

- Clear the Turn on experiences that analyze your content checkbox.

- Restart the app.

You only need to do this once, because the new privacy setting applies automatically across all Microsoft 365 apps linked to your account.

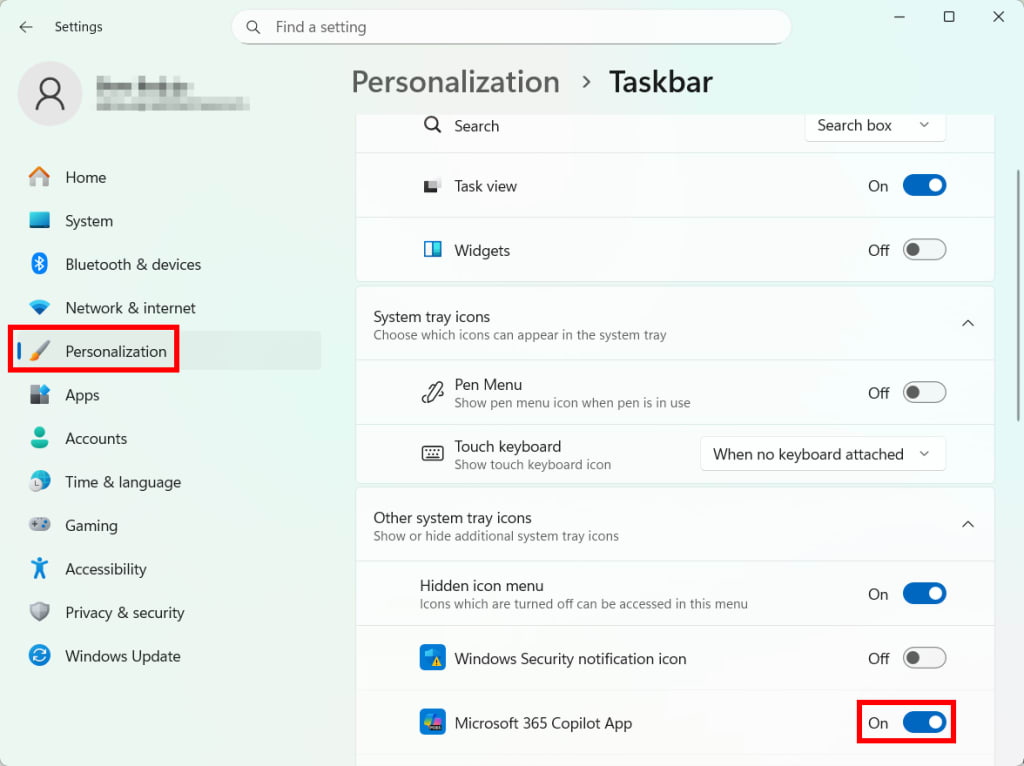

How to hide Copilot icons in Windows 11

You can turn off Copilot icons in Windows, but you can't completely remove the feature from your computer. These steps only turn off Copilot's visual and personalization elements, so you'll still be able to access the app.

To hide the Copilot button from your taskbar:

- Go to Settings → Personalization → Taskbar.

- Find Copilot and turn it off.

If you use apps that have Copilot built in, you'll need to turn it off separately in each app. For example, in Notepad, go to Settings → AI features and turn off Copilot.

- Restart your computer for the changes to take effect.
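For readers who prefer a script to the Settings app, the taskbar step can be sketched in PowerShell. This is a hedged example, not an official method: it assumes the taskbar toggle is stored in the per-user `ShowCopilotButton` registry value, which was the case on Windows 11 builds that shipped the original Copilot taskbar button and may not apply to newer builds where Copilot is a regular pinned app.

```powershell
# Assumption: the taskbar toggle is backed by the per-user ShowCopilotButton value.
$path = "HKCU:\Software\Microsoft\Windows\CurrentVersion\Explorer\Advanced"

# 0 hides the Copilot button, 1 shows it again.
Set-ItemProperty -Path $path -Name "ShowCopilotButton" -Value 0 -Type DWord

# Read the value back to confirm the change.
Get-ItemProperty -Path $path -Name "ShowCopilotButton"
```

Like the Settings toggle, this only hides the button; it does not uninstall or disable Copilot itself.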

How to turn off data and personalization features in the Copilot web app

- In the Copilot web app, select your profile name or photo → your account → Privacy.

- Turn off the following options:

  - Model training on text

  - Model training on voice

  - Personalization and memory

- Select Delete memory to immediately delete what Copilot remembers about you.

- Click Export or delete history. This opens a browser page where you'll need to sign in with the Microsoft account you use for Copilot.

- Select Delete all activity history for each of these categories:

  - Copilot app activity history

  - Copilot in Microsoft 365 apps

  - Copilot in Windows apps

- Select your profile name or photo → Connectors.

- Turn off everything, including OneDrive, Outlook, Gmail, Google Drive, and Google Calendar.

Keep in mind that if your data has already been used for model training, there's no way to undo that.

How to turn off Gaming Copilot model training

- In Windows, press Win + G to open Game Bar.

- Go to Settings → Privacy settings → Gaming Copilot.

- Turn off Model training on text.

- To hide the Gaming Copilot widget, remove it from the Game Bar widget list.

Currently, there's no way to completely remove Gaming Copilot from Xbox Game Bar. You also can't stop it from using your voice interactions to help train its AI models. If you care about privacy, the safest option is not to interact with Gaming Copilot at all.

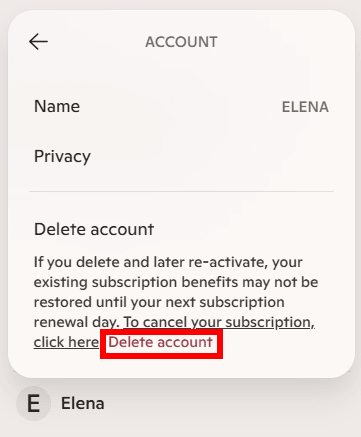

How to delete your Copilot account

In the Copilot web app, select your profile name or photo → your account → Delete account.

Deleting your Copilot account doesn't delete your Microsoft account, and you can still use Copilot without signing in.

If you'd rather not rely on Copilot at all, consider switching to a privacy-first AI assistant that never logs your data, uses it for model training, or shares it with third parties.

What is Microsoft Copilot?

Copilot is Microsoft's line of AI assistants built into its products and services, such as Word, Excel, PowerPoint, Outlook, Teams, and Windows. It uses large language models (LLMs) based on Microsoft's own AI technologies and OpenAI's GPT models, such as GPT-4o, the same model that powers ChatGPT.

Like other LLM-based assistants, Copilot can help you be more productive, creative, and efficient by understanding natural-language prompts, analyzing data, generating and summarizing content, and providing context-based suggestions.

Microsoft offers Copilot in several versions, depending on whether you use it personally or professionally, including:

- Microsoft Copilot: a free personal AI assistant for everyday tasks. It's available as a Windows 11 app (installed by default), a web app, and a macOS app.

- Gaming Copilot: a free personal AI assistant for gaming on Windows that takes screenshots to understand what's happening in a game and offer tips, similar to how Recall takes snapshots of your desktop activity.

- Microsoft 365 Copilot: integrates into Word, Excel, PowerPoint, Outlook, and Teams to use your data through Microsoft Graph, a platform that connects your data, such as emails, calendar, and documents.

What are the privacy risks of Microsoft Copilot?

Microsoft maintains strong privacy protections for Copilot in enterprise environments such as Microsoft 365, but consumer accounts face a very different reality:

Your data can be used for ads and profiling

Copilot services on consumer Microsoft accounts are connected to Microsoft's advertising ecosystem. This means your interactions can influence the ads and recommendations you see. For example, if you ask Copilot about travel deals, you might later see ads for flights or hotels.

Although you can turn off personalized ads in your Microsoft account's privacy settings, the company keeps that interaction data and can use it in anonymized form for other purposes, such as improving ad targeting for other Copilot users with profiles similar to yours.

You keep ownership, but Microsoft can still use your data

Although Microsoft says you retain ownership of your content, using Copilot means you grant the company broad rights over it, including to “copy, distribute, transmit, publicly display, publicly perform, edit, translate, and reformat” your data, and to pass those rights on to third parties.

For example, if you're a writer who pastes excerpts from an unpublished story into Copilot to ask for proofreading or stylistic feedback, that text technically becomes part of the material Microsoft is licensed to process and use under its terms of service. Your unpublished intellectual property can still be used beyond your direct control, even if it's never made public.

Your data can be processed and used for AI training

Data from your Copilot prompts and responses can be collected, logged, and analyzed by Microsoft for AI training. There's no guarantee that your sensitive or personal data will be excluded from training datasets.

As with most AI assistants, Copilot needs large amounts of information to keep learning and refining its responses, even when that process can drift into gray areas of intellectual property. One current example is a series of lawsuits in the US filed by authors and major news outlets alleging that Microsoft and its partner OpenAI used their copyrighted works without permission to train AI models such as Copilot and ChatGPT. OpenAI itself has publicly acknowledged that it's "impossible to train today's leading AI models without using copyrighted materials".

People can see your sensitive data

Microsoft employees can review your Copilot inputs and outputs (including anything you type or upload as attachments) to improve the service, moderate harmful content, or when you choose to send feedback. If that thought makes you uncomfortable, you should avoid entering any sensitive information, such as personally identifiable data or trade secrets.

Connectors expose your data to third parties

Connectors let Copilot link to Microsoft products (such as OneDrive or Outlook) and third-party services (such as Google Drive), so it can pull information from multiple sources. For example, if you ask Copilot to summarize all the meeting notes stored in Google Drive, it will search your Drive files and return a summary.

But when you turn on a connector, you're also allowing Copilot to exchange information with that other service. In the case of Google Drive, while Copilot gains access to your Drive's file structure, names, timestamps, and contents, Google also receives certain details associated with your Microsoft account, such as your profile photo, name, or email address. Your valuable data now lives in both ecosystems (Microsoft and Google), creating a clearer and more complete profile of you across platforms.

Your data can cross borders under US jurisdiction

Depending on where you live, Microsoft may store your Copilot data and interactions in your region. For example, Europeans may have their data stored within the EU. But your data may not always stay there. Despite the company's EU Data Boundary commitments, some processing can still take place outside your region for technical or capacity reasons. And because Microsoft is a US company, it's subject to US laws such as the CLOUD Act, which means US authorities could request access to your data (no matter where it's stored), sometimes without requiring a court order.

Microsoft closely monitors Copilot chat data

Microsoft analyzes Copilot interactions at scale to understand how people use the assistant over time. In the Copilot Usage Report 2025, AI researchers examined 37.5 million Copilot conversations to identify usage patterns, noting that more reports will be published as more data becomes available. Microsoft isn't the only company doing this: its partner OpenAI also publishes consumer usage data for ChatGPT.

Microsoft says this data was de-identified and reduced to high-level summaries, but that doesn't eliminate the privacy risks entirely. Research has repeatedly shown that supposedly anonymous data can often be re-identified when combined with other signals, such as timestamps, device characteristics, or location data. And because Copilot is tied to an account-based ecosystem spanning Windows, Microsoft 365, Bing, Edge, and advertising services, those cross-service connections make re-association easier over time.

It's also unclear how long the original, identifiable Copilot conversation data stays in storage before de-identification, or how it's protected. If the raw data sits on Microsoft's servers, it may be exposed to risks such as unauthorized access, insider threats, or data breaches.

What are the security risks of Microsoft Copilot?

Because Copilot can access an average of three million sensitive data records per organization, it's important to understand the security risks it can introduce, especially if you plan to use it for your business:

- In June 2025, researchers discovered EchoLeak (CVE-2025-32711), a zero-click vulnerability in Microsoft 365 Copilot that allowed attackers to steal data without any user action. By hiding malicious instructions and links inside an ordinary email, attackers could trick Copilot into following those commands, accessing the links, and sending parts of the user's data to an external server. The vulnerability was patched, but it's an example of how integrating AI assistants like Copilot into core systems, and giving them broad access to emails, documents, and internal data, can turn them into insider threats if they're manipulated or misconfigured.

- In August 2024, a cybersecurity firm discovered a critical information disclosure flaw in Microsoft Copilot Studio that allowed custom copilots to exploit weak protections and obtain sensitive information (such as service tokens and database keys) to move deeper into an internal system. Microsoft patched the issue.

- In October 2025, security researchers discovered a phishing technique called CoPhish that abuses Microsoft Copilot Studio to make malicious sign-in pages look completely legitimate. Attackers can create a Copilot Studio agent whose sign-in button redirects victims to a fake OAuth consent page, then share the agent's link (hosted on a trusted Microsoft domain) to lure users into granting access. Once consent is given, the attacker can obtain tokens that let them read, write, or send data, such as emails, chats, calendars, and notes. Microsoft is still working on this issue.

A private alternative to Copilot and Microsoft

If you're uneasy about how deeply Copilot is integrated into Windows and how much data Microsoft collects through its AI services, but you still want the convenience of an AI chatbot, switch to Lumo, our private AI assistant. Hosted in Europe, Lumo never harvests or trains on your data, shows you ads, keeps logs, or shares your information with anyone.

And if you're ready to leave the Microsoft ecosystem altogether, you can build your own private digital workspace with our encrypted ecosystem. You can use:

- Proton Mail and Calendar for secure free email and private scheduling

- Proton Pass for secure password management

- Proton Drive for encrypted cloud storage and file sharing

- Proton VPN to protect your internet traffic

Every Proton service is built to protect your privacy by default, using end-to-end encryption, so that no one but you can access your data, not even us.